At Google, as at any big company, we did OKRs every quarter. We all knew this exercise was important, and we all knew that it would determine our team’s performance at the end of the cycle. What I failed to deeply understand was the true meaning behind OKRs, their history, and why we need to spend high-quality time crafting them. I could give you the textbook answer for why we need OKRs, but I don’t think I ever truly got it until now.

I often like to re-derive existing concepts or re-solve already solved problems myself, from the ground up. This helps me better grasp and appreciate the original. So I asked myself: how would I design the OKR process from the ground up? Here’s what I came up with.

There are 5 phases in the OKR lifecycle: Formation, Communication, Execution, Evaluation, Retrospection.

Formation: This phase can be structured into the following steps:

- #1 Pull: from higher level OKRs and business goals. Sometimes these will be set by your product owner or your parent org.

- #2 Depixelate: by diving into the next level of detail and fleshing out granular OKRs for your org that will serve the top-level OKRs. This is where you, as the leader, will make strategic prioritization decisions, including saying No to a lot of things.

- #3 Align: with stakeholders like managers and ICs on your team, x-functional partners, x-org partners, leadership, etc.

Iterate quickly through #1, #2, and #3 until all stakeholders sign off.

- #4 Percolate: into your org by asking the managers/leaders reporting to you to form their org’s OKRs.

Communication: This is probably the most important step for having truly “aligned” organizations where everyone is rowing in one direction with clear purpose and connection to the bigger mission. It is your job as the leader to first truly understand the connection and then communicate it the best you can to your team and stakeholders. This is where your skills as an effective communicator will be crucial. Most leaders will share a deck and walk through the OKRs in a meeting, but good communicators will go a step further and connect the OKRs to a clear purpose. Note that I am choosing the word purpose, and not business goals or objectives. When the team has a purpose attached to the OKRs, it makes magic happen.

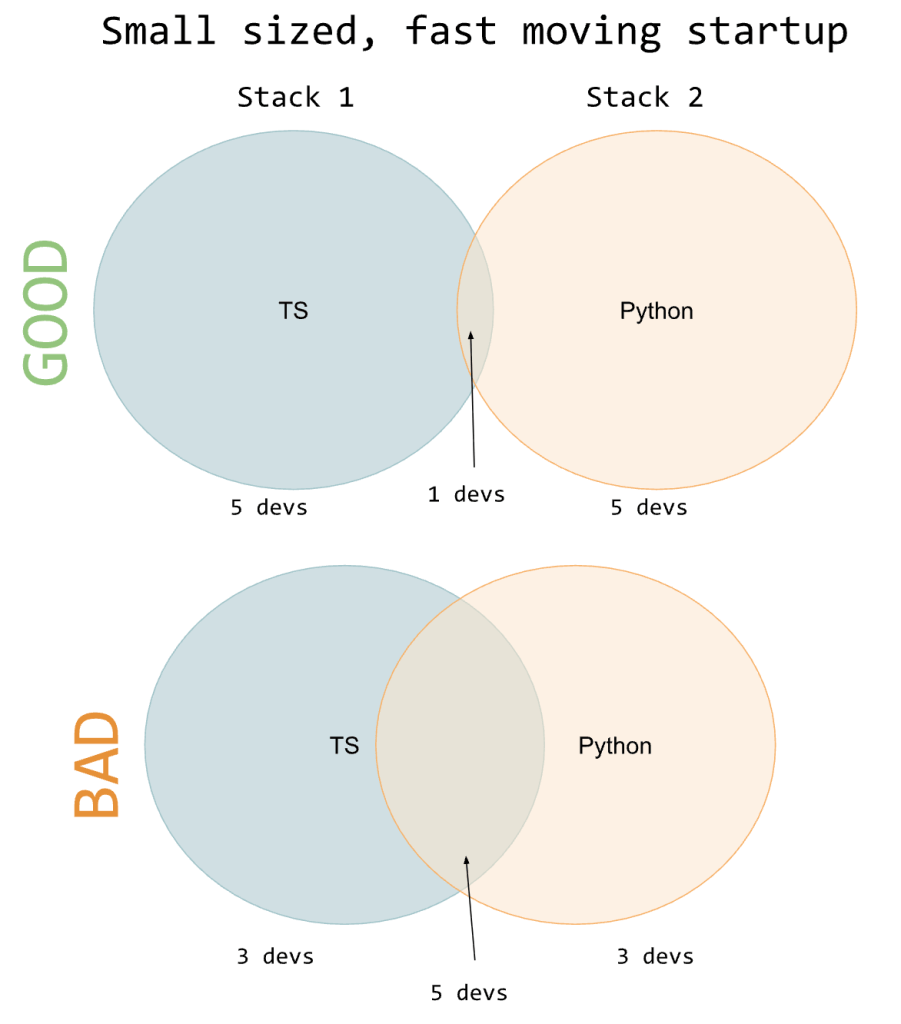

Execution: This is where you trust the team and let them run; always trust but verify. There are entire books written about execution, so I will not delve into that too deeply here. This is the longest phase of the OKR lifecycle. In startups and smaller, fast-moving teams, there needs to be a tight feedback loop with senior leadership during this phase, so that OKRs can be tweaked mid-cycle if needed.

Evaluation: In this phase the OKRs get evaluated from top to bottom based on whatever grading rubric your company uses. At Google, OKRs are graded on a scale of 0 to 1. OKRs are set to be a bit of a stretch, so a score of 0.7 is considered acceptable. How you set and grade OKRs will define the output and impact of your org.
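To make the grading arithmetic concrete, here is a minimal sketch in Python. It assumes an objective's score is the plain average of its key-result scores, which is my simplification for illustration and not necessarily how any given company's rubric works. The function names and the example scores are hypothetical; only the 0 to 1 scale and the 0.7 bar come from the description above.

```python
from statistics import mean

# From the text: OKRs are stretch goals, so 0.7 counts as acceptable.
ACCEPTABLE_SCORE = 0.7


def grade_objective(key_result_scores):
    """Combine key-result scores (each between 0 and 1) into one objective score.

    Assumption: a plain average. A real rubric may weight key results differently.
    """
    if not key_result_scores:
        raise ValueError("an objective needs at least one key result")
    for score in key_result_scores:
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score {score} is outside the 0 to 1 scale")
    return mean(key_result_scores)


def is_acceptable(score, threshold=ACCEPTABLE_SCORE):
    """Return True when a score meets the 'acceptable' bar."""
    return score >= threshold


# Hypothetical objective with three key results.
objective_score = grade_objective([1.0, 0.5, 0.75])
print(objective_score, is_acceptable(objective_score))
```

Because the bar sits at 0.7 rather than 1.0, a team that consistently scores 1.0 under this kind of rubric is probably not setting enough of a stretch.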

Retrospection: This step needs to happen recursively in your sub-teams and orgs as well. One of the biggest challenges here is that not everyone is good at retrospectives, or motivated to do them. It is critical that you identify the right person upfront to lead these retros in each of your subgroups. In bigger companies, you will often have a x-functional TPM or PM partner who will be thrilled to help you with this. I’d highly recommend involving them in this exercise.

Relevant Reads